13. Nudge techniques


Due to the Data (Use and Access) Act coming into law on 19 June 2025, this guidance is under review and may be subject to change. The Plans for new and updated guidance page will tell you about which guidance will be updated and when this will happen.

Do not use nudge techniques to lead or encourage children to provide unnecessary personal data or turn off privacy protections.

What do you mean by ‘nudge techniques’?

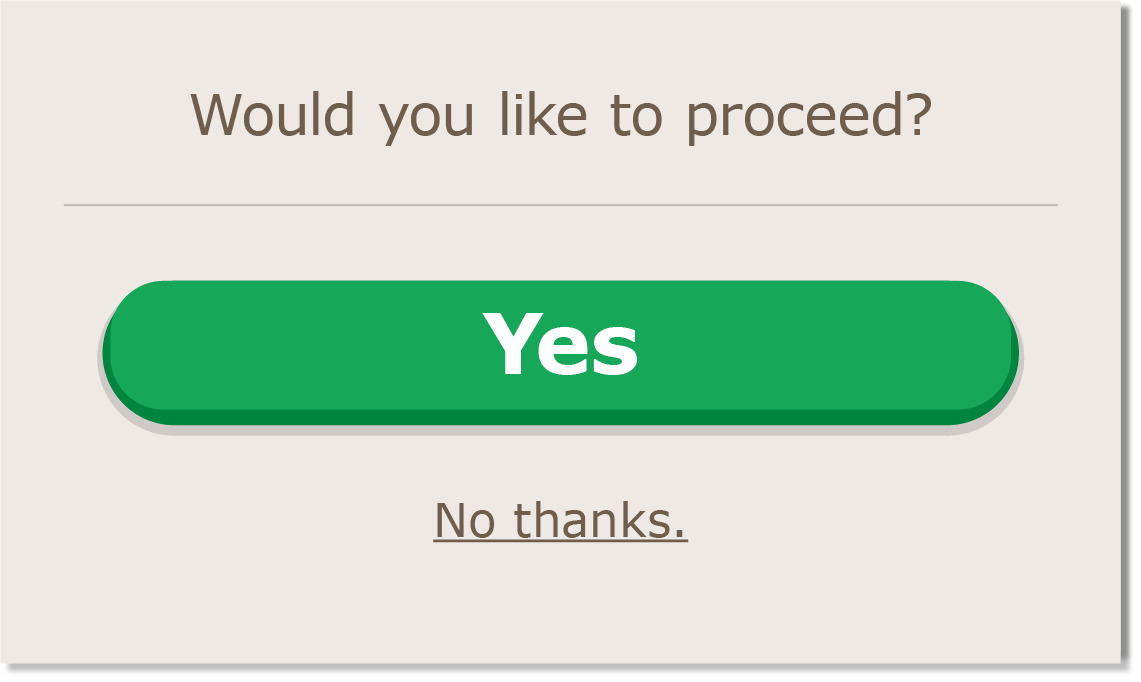

Nudge techniques are design features which lead or encourage users to follow the designer’s preferred paths in the user’s decision making. For example, in the graphic below the large green ‘yes’ button is presented far more prominently than the small print ‘no’ option, with the result that the user is ‘nudged’ towards answering ‘yes’ rather than ‘no’ to whatever option is being presented.

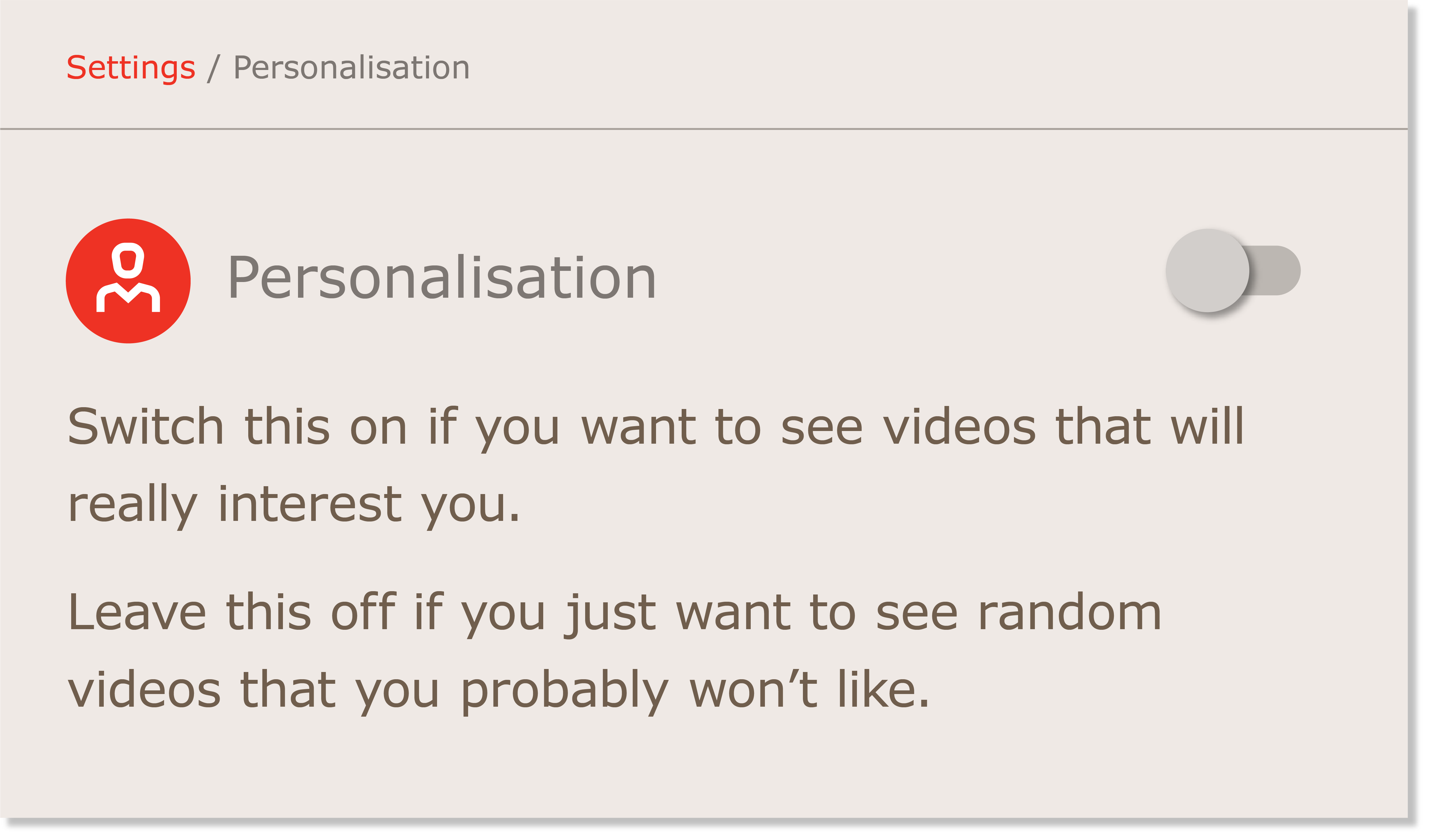

In the next example the language used to explain the outcomes of two alternatives is framed more positively for one alternative than for the other, again ‘nudging’ the user towards the service provider’s preferred option.

A further nudge technique involves making one option much less cumbersome or time-consuming than the alternative, therefore encouraging many users to just take the easy option. For example, providing a low privacy option instantly with just one click, and the high privacy alternative via a six-click mechanism, or with a delay to accessing the service.
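A design review could check for this kind of asymmetry mechanically. The following is a minimal sketch, not a compliance test: the `ChoicePath` type, its fields, and the rule that any extra click or delay on the high privacy path counts as a nudge are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChoicePath:
    """Hypothetical description of one option in a privacy choice."""
    label: str
    clicks: int            # interactions needed to complete the choice
    delay_seconds: float   # enforced wait before the service can be used


def friction_gap(low_privacy: ChoicePath, high_privacy: ChoicePath) -> dict:
    """Return how much more effort the high privacy path costs the user."""
    return {
        "extra_clicks": high_privacy.clicks - low_privacy.clicks,
        "extra_delay_seconds": high_privacy.delay_seconds - low_privacy.delay_seconds,
    }


def nudges_away_from_privacy(low_privacy: ChoicePath, high_privacy: ChoicePath) -> bool:
    """Assumed rule: any extra click or delay on the high privacy path is a nudge."""
    gap = friction_gap(low_privacy, high_privacy)
    return gap["extra_clicks"] > 0 or gap["extra_delay_seconds"] > 0


# The one-click versus six-click example from the text.
one_click = ChoicePath("low privacy", clicks=1, delay_seconds=0.0)
six_clicks = ChoicePath("high privacy", clicks=6, delay_seconds=0.0)
flagged = nudges_away_from_privacy(one_click, six_clicks)
```

Here `flagged` is true because the high privacy path needs five more clicks. A real review would also need to look at visual prominence and wording, which this sketch does not attempt to measure.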

Why is this important?

Article 5(1)(a) of the GDPR says that personal data shall be:

“processed lawfully, fairly and in a transparent manner in relation to the data subject (‘lawfulness, fairness and transparency’)”

Recital 38 to the GDPR states that:

“Children merit specific protection with regard to their personal data, as they may be less aware of the risks, consequences and safeguards concerned and their rights in relation to the processing of personal data…”

Nudge techniques in the design of online services can be used to encourage users, including children, to provide an online service with more personal data than they would otherwise volunteer. Similarly, they can be used to lead users, particularly children, to select less privacy-enhancing choices when personalising their privacy settings.

Using techniques based on the exploitation of human psychological bias in this way goes against the ‘fairness’ and ‘transparency’ provisions of the GDPR as well as the child specific considerations set out in Recital 38.

How can we make sure that we meet this standard?

Do not use nudge techniques to lead children to make poor privacy decisions

You should not use nudge techniques to lead or encourage children to activate options that mean they give you more of their personal data, or turn off privacy protections.

You should not exploit unconscious psychological processes to this end (such as associations between certain colours or imagery and positive outcomes, or human affirmation needs).

You should not use nudge techniques that might lead children to lie about their age, for example by pre-selecting an older age range for them, or by not allowing them the option of selecting their true age range.
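The two age-related examples above can be expressed as checks on an age picker's configuration. This is a sketch under stated assumptions: `AgePickerConfig` is a hypothetical type, the `18+` option is added for illustration alongside the age ranges used in the table in this standard, and the check treats any pre-selected range as a problem, which is stricter than the text requires.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence

# Age ranges from the table in this standard, plus an assumed adult option.
AGE_RANGES = ("0-5", "6-9", "10-12", "13-15", "16-17", "18+")


@dataclass(frozen=True)
class AgePickerConfig:
    """Hypothetical configuration of an age range selector."""
    options: Sequence[str]
    preselected: Optional[str] = None


def age_picker_problems(config: AgePickerConfig) -> List[str]:
    """List ways in which the picker could steer a child away from their true age."""
    problems = []
    if config.preselected is not None:
        problems.append("an age range is pre-selected: " + config.preselected)
    missing = [r for r in AGE_RANGES if r not in config.options]
    if missing:
        problems.append("age ranges not offered: " + ", ".join(missing))
    return problems


# A picker that only offers older ranges and defaults to adult.
steering = AgePickerConfig(options=("13-15", "16-17", "18+"), preselected="18+")
steering_problems = age_picker_problems(steering)

# A picker that offers every range with nothing pre-selected.
neutral_problems = age_picker_problems(AgePickerConfig(options=AGE_RANGES))
```

The first configuration produces two problems and the second produces none.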

Use pro-privacy nudges where appropriate

Taking into account the best interests of the child as a primary consideration, your design should support the developmental needs of the age of your child users.

Younger children, with limited levels of understanding and decision-making skills, need more instruction-based interventions, less explanation, unambiguous rules to follow and a greater level of parental support. Nudges towards high privacy options, wellbeing-enhancing behaviours, and parental controls and involvement should support these needs.

As children get older your focus should gradually move to supporting them in developing conscious decision making skills, providing clear explanations of functionality, risks and consequences. They will benefit from more neutral interventions that require them to think things through. Parental support may still be required but you should present this as an option alongside signposting to other resources.

Consider nudging to promote health and wellbeing

You may also wish to consider nudging children in ways that support their health and wellbeing. For example, nudging them towards supportive resources or providing tools such as pause and save buttons.

If you use personal data to support these features then you still need to make sure your processing is compliant (including having a lawful basis for processing and providing clear privacy information), but subject to this it is likely that such processing will be fair.

The table below gives some recommendations that you might wish to apply to children of different ages, although again you are free to develop your own service-specific user journeys that follow the principle in the headline standard.

You should also consider any additional responsibilities you may have under the applicable equality legislation for England, Scotland, Wales and Northern Ireland.

| Age range | Recommendations |
|---|---|
| 0-5 Pre-literate & early literacy | Provide design architecture which is high-privacy by default. If a change of default is attempted, nudge towards maintaining high privacy or towards parental or trusted adult involvement. Avoid explanations – present as rules to protect and help. Consider further interventions such as parental notifications, activation delays or disabling the facility to change defaults without parental involvement, depending on the risks inherent in the processing. Nudge towards wellbeing enhancing behaviours (such as taking breaks). Provide tools to support wellbeing enhancing behaviours (such as mid-level pause and save features). |
| 6-9 Core primary school years | Provide design architecture which is high-privacy by default. If a change of default is attempted, nudge towards maintaining high privacy or towards parental or trusted adult involvement. Provide simple explanations of functionality and inherent risk, but continue to present as rules to protect and help. Consider further interventions such as parental notifications, activation delays or disabling the facility to change defaults without parental involvement, depending on the risks inherent in the processing. Nudge towards wellbeing enhancing behaviours (such as taking breaks). Provide tools to support wellbeing enhancing behaviours (such as mid-level pause and save features). |
| 10-12 Transition years | Provide design architecture which is high-privacy by default. If a change of default is attempted, provide explanations of functionality and inherent risk and suggest parental or trusted adult involvement. Present options in ways that encourage conscious decision making. Consider further interventions such as parental notifications, activation delays or disabling the facility to change defaults without parental involvement, depending on the risks. Nudge towards wellbeing enhancing behaviours (such as taking breaks). Provide tools to support wellbeing enhancing behaviours (such as mid-level pause and save features). |
| 13-15 Early teens | Provide design architecture which is high-privacy by default. Provide explanations of functionality and inherent risk. Present options in ways that encourage conscious decision making. Signpost towards sources of support including parents. Consider further interventions depending on the risks. Suggest wellbeing enhancing behaviours (such as taking breaks). Provide tools to support wellbeing enhancing behaviours (such as mid-level pause and save features). |
| 16-17 Approaching adulthood | Provide design architecture which is high-privacy by default. Provide explanations of functionality and inherent risk. Present options in ways that encourage conscious decision making. Signpost towards sources of support including parents. Suggest wellbeing enhancing behaviours (such as taking breaks). Provide tools to support wellbeing enhancing behaviours (such as mid-level pause and save features). |
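A service that knows a user's age band could encode these recommendations as configuration that its interface reads. The following is one possible sketch: the `BandPolicy` type, its field names and the short string values are hypothetical labels summarising the table, not terms defined by this standard, and a real service would tailor them to its own user journeys and risks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BandPolicy:
    """Hypothetical summary of one row of the recommendations table."""
    label: str
    high_privacy_default: bool
    explanation_style: str              # "rules_only", "simple_with_rules" or "full"
    adult_support: str                  # "nudge", "suggest" or "signpost"
    consider_further_interventions: bool


# (lowest age, highest age, policy), following the age ranges in the table.
BANDS = [
    (0, 5, BandPolicy("Pre-literate & early literacy", True, "rules_only", "nudge", True)),
    (6, 9, BandPolicy("Core primary school years", True, "simple_with_rules", "nudge", True)),
    (10, 12, BandPolicy("Transition years", True, "full", "suggest", True)),
    (13, 15, BandPolicy("Early teens", True, "full", "signpost", True)),
    (16, 17, BandPolicy("Approaching adulthood", True, "full", "signpost", False)),
]


def policy_for_age(age: int) -> BandPolicy:
    """Return the policy for a child's age, or raise if the age is outside 0-17."""
    for low, high, policy in BANDS:
        if low <= age <= high:
            return policy
    raise ValueError("age is outside the ranges covered by the table")


early_years = policy_for_age(4)
late_teens = policy_for_age(16)
```

Every band keeps the high-privacy default; what changes with age is how much explanation is given and how parental involvement is presented.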