The ICO’s response to the consultation on the draft guidance for ‘Likely to be accessed’ in the context of the Children’s Code

Introduction

In September 2022, we clarified our position that adult-only services are in scope of the Children’s code (the code) if they are likely to be accessed by a significant number of children. To support Information Society Services (ISS) in assessing whether children are likely to access their service, we developed draft guidance and consulted publicly on it.

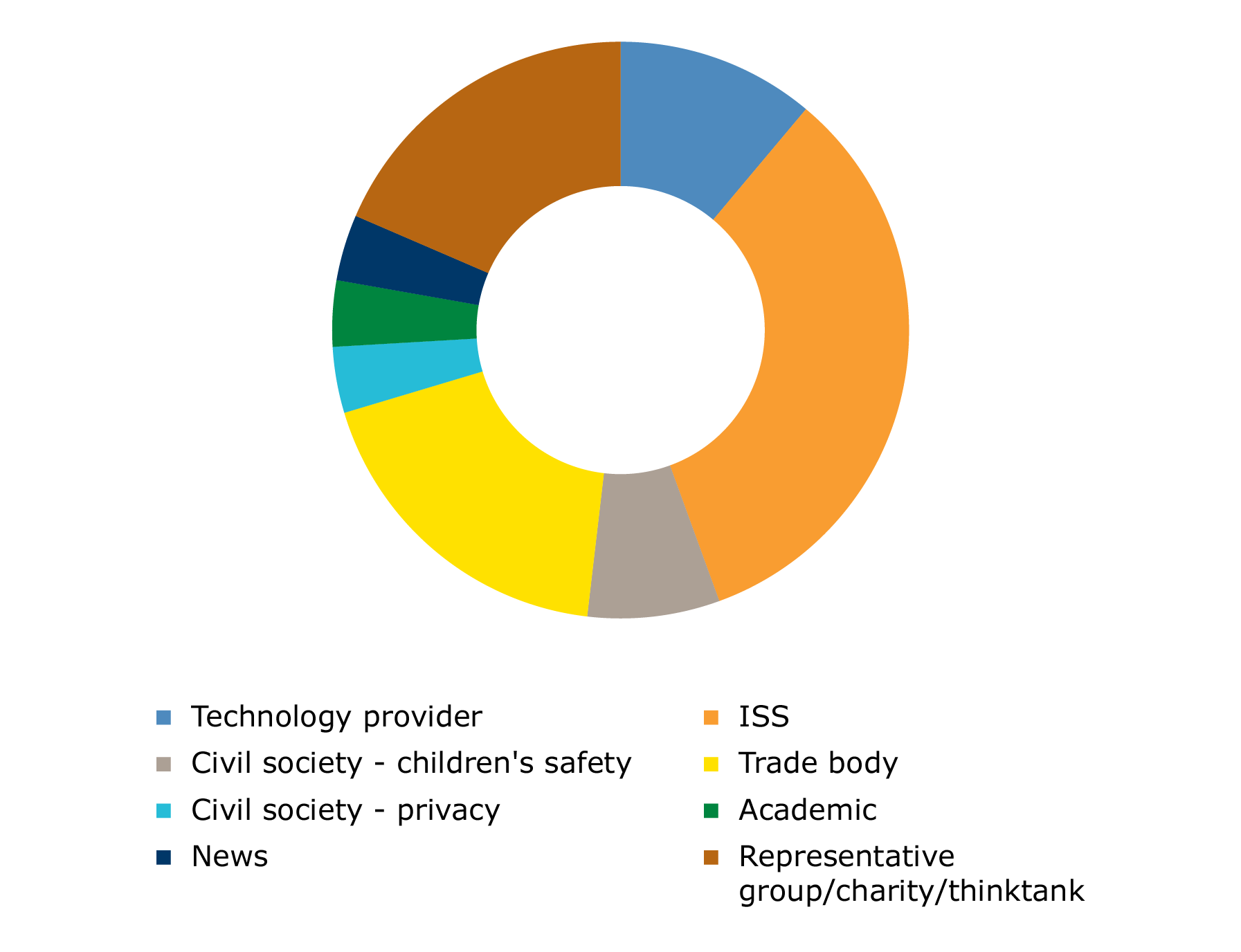

The consultation on the guidance was open for eight weeks between 24 March and 19 May 2023. We received 27 responses, 67% from industry and 33% from civil society groups (as indicated in the chart below). We are grateful to those who took the time to respond.

We have reviewed the responses and, where appropriate, updated the guidance based on this feedback. Some of the thematic updates made to the guidance are outlined in this report. We also explain where it has not been appropriate to update the guidance, including our rationale.

Chart: Stakeholder responses to consultation

Feedback was provided from across sectors. Some overarching themes emerged, which are summarised below:

- Positive feedback on the ICO's commitment to provide regulatory certainty in this area.

- Feedback requesting that the definition of ‘significant’ should include numerical thresholds or baseline percentages.

- The view that there should be more emphasis on necessity and proportionality and applying a risk-based approach.

- Respondents highlighted that the guidance focussed predominantly on high risk processing in the entertainment and leisure sectors, but its scope is wider and applies to other sectors as well.

- The importance of regulatory alignment, and potential risks of regulatory confusion and overburden. Respondents were interested in alignment with the Online Safety Bill (OSB), and referenced the wider, international landscape in relation to data protection law and children’s privacy.

- Requests for guidance on how to approach and prioritise the non-exhaustive list of factors.

- Feedback that a broader range of case studies would be beneficial, including examples of ISS which do not pose high risks or are unlikely to be attractive to children.

- A view from some respondents that the case studies focussed on age gating children from their platforms, rather than reducing their processing risks and complying with the code.

Whilst it is not possible to cover every point in detail, the sections below discuss these key themes and our response to each.

Regulatory Certainty

Summary of stakeholder response:

Many responses were supportive of the ICO’s commitment to provide regulatory certainty in this area and support ISS in their decision making. In total, 63% of responses agreed or strongly agreed that the FAQs were useful for providing regulatory certainty. Although aspects of the guidance were considered to provide regulatory certainty, there was feedback that the definition of ‘significant number’ is unclear.

The ICO’s response:

As outlined in the ICO25 strategic plan, we exist to empower organisations through information. We enable businesses and organisations to use information responsibly and we do this by providing certainty on what the law requires, what represents good practice and our approach as the regulator. Following the clarification of our position on adult-only services that are likely to be accessed by children, we recognised that guidance would both support ISS providers in their assessment of whether children are likely to access their services and provide regulatory certainty on our position in relation to this matter.

We recognise that while some aspects of the guidance, such as the FAQs, were positively received and felt to provide regulatory certainty, there was feedback that further clarification was required regarding the definition of a ‘significant number’. This has been addressed in the following section.

Please see our ICO25 strategic plan: Our purpose for further information.

Significant number

Summary of stakeholder responses:

59% of responses disagreed or strongly disagreed that the definition of a ‘significant number’ was helpful.

The key themes which emerged from the feedback are:

- The definition was subjective and unclear which meant it was open to interpretation.

- A request for a statistical, percentage or threshold baseline approach.

- Concerns that, if the definition is open to interpretation, organisations could reach a different decision from the ICO in determining a ‘significant number’, and that this could result in regulatory action.

- Concerns that the definition was too broad and therefore would apply to a wide range of ISS providers, even where there isn’t a high risk.

- Feedback that the term ‘de minimis’ is too legalistic and could easily be misunderstood by SMEs.

- Respondents also queried the relationship between the definition and the list of factors, as the definition was viewed as implying a lower threshold than the list of factors.

- There was also a view that the definition does not align with the risk-based approach set out in a data protection impact assessment (DPIA).

- There was a request that the definition should cover services that children actually use, rather than extending to all services that children could theoretically access.

- Finally, there was a view that the threshold should be contingent on several factors related to the type of service, its sector and its design.

The ICO’s response:

We thank respondents for taking the time to outline their positions and acknowledge that this was an area of concern for a number of respondents across different sectors. We recognise that many respondents, including those who agreed that other elements of the guidance were useful, felt the definition could be improved.

The ICO is a whole-economy regulator, and the legislation it oversees applies to a diverse range of organisations, from SMEs to large corporations. A range of factors will determine what is ‘significant’, including the overall number of users of the service, the number of users that are likely to be children and the data processing risks the service poses to children. For example, a large social media site that uses behavioural profiling for marketing purposes may have only a small percentage of child users, but that percentage may still represent a large number of children, and the type of processing may pose a high risk to them. Alternatively, a new SME ISS may have only a small number of children accessing its service, but its processing may track users’ location data (including that of children) and make that location public. Because this processing carries a high risk of harm to children, even a small number or percentage of children would represent a significant number in relation to the risk. Numerical thresholds are inflexible: they do not take into account the different types of processing offered by services or the different levels of risk that processing poses.

Based on the consultation feedback, we have updated the guidance to include a new FAQ which outlines why we have not set a numerical threshold for assessing a ‘significant number’ of children.

We welcome the commitment made by organisations to meet their data protection obligations. While we are committed to providing regulatory certainty, under the accountability requirements organisations are ultimately responsible for undertaking their own assessment of how they meet their obligations.

When applying the law, we take a range of factors into consideration. Efforts by organisations to apply our guidance are taken into account, alongside any risks to people’s data rights. Use of our enforcement powers is reserved for circumstances where non-compliance would do the most harm, or where there are repeated or wilful failings to comply with the law.

For further information about how we regulate the code, please see our guidance on Enforcement of this code and our regulatory action policy.

Scope, necessity, proportionality, and risk

Summary of stakeholder responses:

Respondents felt that, although the guidance applies to any service which is not designed to attract children but which children could access, the focus was largely on high risk, adult-only ISS from the leisure and entertainment sectors. Many felt that the guidance did not provide sufficient resources for ISS which do not pose high data processing risks to the rights and freedoms of children, or for sites which children could access but which are unlikely to be attractive to them.

Respondents also felt that, because the guidance was drafted with high risk ISS as the target audience, it did not adequately reflect that assessments should be made in a risk-based and proportionate way, consistent with data protection legislation. Some respondents felt the approach outlined in the guidance may be disproportionate for SMEs or startups which do not present high data processing risks to children.

The ICO’s response:

The guidance was drafted to support ISS following our clarification in September 2022 that adult-only services are in scope of the Children’s code if they are likely to be accessed by a significant number of children. This position was based on a growing body of research¹ indicating that children are likely to be accessing adult-only services and that these services pose data protection harms. The guidance sought to help high risk, adult-only services assess whether a significant number of children are in reality accessing their site and, if so, take appropriate action.

The feedback highlighted that the guidance has a wide reach: it applies to ISS which do not present a high risk to children, and to ISS which children could access but are unlikely to do so. We have amended the guidance, including the FAQ on ‘significant’, the approach to the list of factors and the case studies, to reflect that assessments should be conducted in a risk-based and proportionate way. We have added a case study demonstrating the steps that an ISS that is unappealing to children could take, and a case study showing the approach taken by an ISS which does not pose high data processing risks to children. We are assessing options to update our guidance for SMEs on the code. The current guidance for SMEs can be accessed here.

Furthermore, we are running a workstream which seeks to help ISS understand the data protection risks of processing children’s data, and the harms those risks can lead to. This project will contribute to guidance for organisations on how to assess risks and harms to children. The work will also be used to raise awareness of these issues among families. Further reading on this topic is available here:

- Age appropriate design code

- Data protection impact assessments

- Children’s Code Self-Assessment Risk Tool

- Information Commissioner’s Opinion on age assurance for the Children’s code

Regulatory Alignment

Summary of stakeholder responses:

Respondents raised the importance of regulatory alignment and cohesion, particularly with Ofcom in line with the requirements of the OSB. The potential for regulatory over-burden and confusion was seen as a risk if regulators do not ensure consistency. There were also criticisms that the guidance at times veered into content moderation.

The ICO’s response:

The ICO has worked closely with Ofcom on the interface between data protection and online safety since Ofcom’s appointment by government as the regulator for the forthcoming OSB. In November 2022, Ofcom and the ICO published a joint statement setting out our ambition to work together on online safety and data protection. The Digital Regulation Cooperation Forum (DRCF) workplan for 2023/24 includes a joint ICO and Ofcom workstream on online safety and data protection, continuing our bilateral work on safety technologies, including age assurance. We have engaged with Ofcom while developing the guidance, with the intention of reaching alignment where appropriate across the two regimes, while reflecting that these regulatory regimes are seeking to do different things.

We have clarified that the guidance relates to the processing of children’s personal data. Any references to ‘high risk’ relate to personal data processing activities.

The ICO and Ofcom joint statement and DRCF workplan are available here: ICO and Ofcom strengthen partnership on online safety and data protection and Workplan for 2023/24².

Non-exhaustive factors

Summary of stakeholder responses:

56% of respondents either agreed or strongly agreed that the non-exhaustive list of factors was a useful tool. Responses requested clarity on how to approach the list of factors and suggested that it would be useful to weight the factors according to importance. There were requests for additional factors including gamification and age assurance. Some respondents queried whether one factor alone can bring an ISS into scope of the code.

Concerns were raised that comprehensively assessing the non-exhaustive list of factors would be excessive and disproportionate for SMEs or services which do not present high data processing risks to the rights and freedoms of children. Responses also suggested developing a dedicated resource for SMEs. There was apprehension about how the ICO would regulate against the non-exhaustive list of factors.

We received feedback that the non-exhaustive list of factors distinguishes between under- and over-18s, but not between the different age ranges of children. Questions were also raised about how to identify appropriate research and ensure its provenance.

The ICO’s response:

The guidance has been updated to reflect that the non-exhaustive list of factors is a supportive resource which provides examples of factors that ISS could consider as part of their assessment. How an organisation approaches its assessment will depend on the data processing risks that the ISS presents. The risks are related to the processing undertaken and not the size of the organisation. Therefore, SMEs also have a responsibility to assess whether a significant number of children are accessing their service, as some SMEs may pose high data processing risks to children and should conduct the assessment accordingly.

We have reinforced that, ultimately, organisations must demonstrate how they comply with their accountability requirements. We have also highlighted the relevance of the assessment to the DPIA risk assessment.

Whether one factor alone can bring an ISS into scope depends on the data processing risks, the type of service and its design. In some circumstances a single factor may give an ISS clarity on whether or not a significant number of children are likely to access its service. In other cases, an ISS may need to consider a range of factors. The list of factors is a supportive tool to assist ISS in this assessment, but ultimately the ISS is accountable for its decision-making.

We have added an FAQ which reinforces our regulatory approach; further information on our position can be found in the ‘Significant number’ section of this document. We are also exploring options to update our SME guidance on the Children’s code to include the likely to be accessed assessment.

Additions have been made to the non-exhaustive list of factors. For example, gamification has been added to the ‘types of content, design features and activities which are appealing to children’ factor. ‘Whether children can access your service’ has been added as a new factor to show that robust age assurance that prevents access is a factor ISS can consider in their assessment. We have also clarified that ISS are responsible for determining the reliability of the research they consider and must be able to justify their approach. This process links to the accountability requirement.

Standard 3 of the code provides advice on the age ranges of children. Read more about Age appropriate application in the Children's code. Further information on the accountability framework can be found here.

Case studies

Summary of stakeholder responses:

59% of responses agreed or strongly agreed that the case studies were helpful and provided positive feedback about the ICO's commitment to use real life scenarios in the guidance. As the case studies predominantly included ISS which pose high data processing risks, concerns were raised that they resorted to restricting children from the platforms, rather than applying the standards of the code. The examples were viewed as too restrictive for many services which do not pose high data processing risks to younger users. Many respondents requested more case studies from a variety of different sectors.

There were requests for the case studies to go further than assessing whether a ‘significant number’ of children are likely to access a service; many respondents also wanted them to set out which age assurance measures to use in different scenarios.

The term ‘robust age assurance measure’ was queried, as was the reference to an age assurance ‘page’. Concerns were also raised about a ‘chilling effect’ on adults using services, and about how to comply with data minimisation when conducting age assurance.

The ICO’s response:

The case studies provide examples of ISS which pose the highest data processing risks to children, and where research indicates that children are likely to access these adult services. The case studies have been updated to reflect more diverse outcomes when an ISS identifies that children are likely to access a service. Rather than restricting access, some case studies now result in the ISS lowering the data processing risks, making the platform appropriate for child users or putting high risk data processing behind an age assurance system. New case studies have been added for ISS that do not pose high data processing risks. These suggest that, after conducting the assessment, only minimal action, if any, would be required.

The focus of this guidance is to assist ISS in assessing whether children are likely to access a service, so that appropriate steps can be taken as outlined in the code. ISS should refer to our Commissioner’s Opinion on age assurance for further guidance; it will be updated in due course to reflect our position on likely to be accessed.

Age assurance must be conducted in accordance with data protection principles (eg data minimisation, purpose limitation), and ISS are accountable for choosing the method most appropriate for the data processing risks that their service presents. Further information is available in our Commissioner’s Opinion on age assurance.

References to age assurance ‘pages’ were used to highlight where the page may not be within scope of the code under the circumstances outlined in the guidance. We recognise that there are a range of age assurance measures available, not all of which are conducted on an ‘age assurance’ page.

See our Commissioner’s Opinion on age assurance for further information.

Feedback on potential costs, burdens and impact on harms

We have reflected on feedback on impacts and the impact assessment has been updated accordingly. The updated impact assessment provides more clarity on the methodology used in assessing impacts, as well as more detail on the impacts using a cost benefit analysis framework, and the affected groups we have considered. You can read more in the Likely to be accessed impact assessment.

Next steps

We continue our work on the code through a variety of workstreams to support our delivery of the ICO25 objective of empowering responsible innovation and sustainable economic growth.

We will promote the guidance and engage with organisations regarding their feedback via stakeholder engagement events which will be arranged in autumn 2023.

We continue to work closely with Ofcom through the DRCF on this area of joint regulatory interest to ensure a cohesive approach.

The Commissioner’s Opinion on age assurance will be updated to reflect the guidance on likely to be accessed in due course.

We have recently published our evaluation of the Children’s code. Within it is a learning point that states the ICO should continue to monitor and evaluate the code and any additional guidance or clarifications related to children’s privacy. This is currently being considered by the ICO’s Children’s Privacy Board.

Please see our ICO25 strategic plan for further information. Our evaluation of the Children’s code is also available here.

1. From the NSPCC, Microsoft and the British Board of Film Classification.

2. See page 8 for the Online Safety and Data Protection workstream.